How to Interpret and Use the MITRE ATT&CK Framework Evaluations Results

Every spring, MITRE Engenuity releases its evaluation results to the public. MITRE does not assign scores, rankings, or ratings to the results. Organizations and vendors can use the data to conduct their own analysis and interpretation, none of which is endorsed or validated by MITRE Engenuity.

In this blog, we provide an overview of how the evaluations work, describe ways to interpret the results, and share our perspective on how to select an Endpoint Security vendor.

How the MITRE ATT&CK Evaluations work

As stated on MITRE’s website, the evaluations show how each vendor approaches threat detection through the language and structure of the MITRE ATT&CK® knowledge base. In essence, this means that they are testing how well vendors can detect, enrich, and map the tactics, techniques, and procedures (TTPs) to the MITRE ATT&CK Framework.

Participating vendors have months to prepare. Some devote resources to preparing for the test, creating rules specific to the evaluation criteria and operating system. Others choose to see how their product performs at its base level and don’t dedicate resources to customizing it for the test.

MITRE typically tests two different scenarios, spread over two days. On day one, MITRE conducts the test and the vendor can see what their product detected, as well as whether their detection rules were mapped correctly. Armed with this information, the vendor can take time to make minor modifications to prepare for day two, including updating detection logic to earn additional credit by mapping to the MITRE Framework.

Ways to interpret the MITRE ATT&CK Evaluations results

At face value, the easiest way to interpret the results would be to take the Evaluation Summary scores, including Analytic Coverage, Telemetry Coverage, and Visibility, and compare how vendors performed. However, this doesn’t consider the unique ways that different vendors approach threat detection. Some vendors aim to limit noise, so they don't create alerts for every discovery technique.

For example, if the Discovery tactic is in scope for the evaluation, rules that look for local accounts or file shares in a production environment could be incredibly noisy and produce a lot of false positives. However, to score well in MITRE evaluations, a product needs to alert every time a scenario is run and map the alert to the MITRE Framework. Currently, MITRE does not penalize for false positives. This means vendors can create very noisy rulesets to score higher, when in reality the alert fatigue from such sensitive rules would negate any benefit.
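To make the trade-off concrete, here is a minimal sketch with made-up numbers. The `Ruleset` class, the step counts, and the daily alert volumes are all hypothetical and do not come from any MITRE evaluation. It only shows that a ruleset can lead on evaluation coverage while being far less precise in production.

```python
from dataclasses import dataclass


@dataclass
class Ruleset:
    name: str
    steps_detected: int  # evaluation steps that produced a detection (hypothetical)
    true_alerts: int     # production alerts per day tied to real threats (hypothetical)
    false_alerts: int    # production alerts per day that were benign (hypothetical)


def coverage(ruleset: Ruleset, total_steps: int) -> float:
    """Share of evaluation steps detected. False positives play no part."""
    return ruleset.steps_detected / total_steps


def precision(ruleset: Ruleset) -> float:
    """Share of production alerts that were worth an analyst's time."""
    total = ruleset.true_alerts + ruleset.false_alerts
    return ruleset.true_alerts / total if total else 0.0


TOTAL_STEPS = 100  # hypothetical number of steps in the evaluation
noisy = Ruleset("noisy", steps_detected=95, true_alerts=5, false_alerts=495)
tuned = Ruleset("tuned", steps_detected=80, true_alerts=5, false_alerts=20)

for r in (noisy, tuned):
    print(f"{r.name}: coverage={coverage(r, TOTAL_STEPS):.0%}, precision={precision(r):.0%}")
```

In this illustration the noisy ruleset wins on coverage, but only about one in a hundred of its production alerts is real, which is the alert-fatigue problem described above.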

You should dig deeper into the evaluations than just the high-level summaries. To start, look at what is in the scope of the test. Consider whether the threats MITRE is testing align with what your organization needs protection against. Not everything tested applies to every organization.

Next, you should dig deeper into the results based on the attacker TTPs most relevant to your organization. MITRE provides charts, graphs, and details on the various TTPs. Review these to fully understand the results. For a given step, a vendor's detection may be credited only at the Technique, Tactic, General, or Telemetry level. MITRE assigns these categories based on how well the different rules map to the MITRE ATT&CK Framework.
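One way to do this is to filter the published results down to the techniques you care about and count how each vendor's detections were categorized. The sketch below assumes you have already copied the results into a simple list of rows. The vendor names, the rows, and the field names are hypothetical, and this is not a MITRE-provided format or tool.

```python
from collections import Counter

# Hypothetical, hand-entered rows for illustration only.
RESULTS = [
    {"vendor": "Vendor A", "technique": "T1059.001", "category": "Technique"},
    {"vendor": "Vendor A", "technique": "T1087.001", "category": "Technique"},
    {"vendor": "Vendor A", "technique": "T1486", "category": "Telemetry"},
    {"vendor": "Vendor B", "technique": "T1059.001", "category": "General"},
    {"vendor": "Vendor B", "technique": "T1087.001", "category": "None"},
    {"vendor": "Vendor B", "technique": "T1486", "category": "Technique"},
]


def summarize(results, relevant_techniques):
    """Count detection categories per vendor, keeping only relevant techniques."""
    summary = {}
    for row in results:
        if row["technique"] in relevant_techniques:
            summary.setdefault(row["vendor"], Counter())[row["category"]] += 1
    return summary


# Techniques this (hypothetical) organization cares most about.
RELEVANT = {"T1059.001", "T1486"}

for vendor, counts in summarize(RESULTS, RELEVANT).items():
    print(vendor, dict(counts))
```

Filtering first keeps techniques that matter less to you, such as noisy discovery activity, from skewing the comparison.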

The last thing to look at is the tool itself and its user interface. In its Evaluation Overview, MITRE provides screenshots of the tool, the detection rule triggered, and so on. There are several questions to ask when looking at the screenshots, including:

- Do I understand what the product is showing?

- Can I interpret the data?

- Is it confusing to use?

As you go through this exercise, compare your answers across the different products. Ease of use and knowing how to get the most out of the product are critical.

One last thing to note: as you review all the information MITRE provides, you should also look at year-over-year performance. Look for trends, such as whether a vendor is improving each year. This is a key bellwether of whether the vendor is continuing to invest in and improve the product.
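A year-over-year check can be as simple as comparing one metric across evaluation rounds. The sketch below uses made-up coverage percentages for a single hypothetical vendor, and the function names are illustrative only.

```python
def yearly_changes(coverage_by_year):
    """Return the change in coverage (percentage points) from each year to the next."""
    years = sorted(coverage_by_year)
    return {
        later: coverage_by_year[later] - coverage_by_year[earlier]
        for earlier, later in zip(years, years[1:])
    }


def is_improving(coverage_by_year):
    """True only if coverage rose in every consecutive pair of years."""
    return all(delta > 0 for delta in yearly_changes(coverage_by_year).values())


# Hypothetical coverage percentages, not taken from any real evaluation.
vendor_coverage = {2020: 70, 2021: 78, 2022: 85}

print(yearly_changes(vendor_coverage))
print(is_improving(vendor_coverage))
```

Because the scenarios change each year, treat the result as a rough signal of investment and not as a precise measurement.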

How to select an endpoint security vendor

The MITRE Evaluation results are just one dimension to consider when evaluating which product works best for you. In fact, Forrester, in its recent Forrester Wave™ of Endpoint Detection And Response Providers, weights MITRE Alignment at only 5%. It's important to take stock of the complete security picture. As you evaluate different offerings, you need to ask:

- What sort of impact does it have on performance?

- What capabilities does it include?

- How much does it cost?

- Is it both an Endpoint Detection & Response (EDR) and a Next-Generation Antivirus (NGAV)? Does it cost extra to use both?

- Does it include a SIEM or equivalent offering?

- What additional data sources can it integrate with?
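The questions above can be turned into a simple weighted scorecard. In the sketch below, the only figure taken from this post is the 5% weight on MITRE alignment. Every other weight, criterion name, and 1-to-5 score is a hypothetical placeholder to replace with your own priorities.

```python
import math

# Weights must sum to 1. Only the 0.05 for MITRE alignment comes from the text;
# the rest are hypothetical and should reflect your organization's priorities.
WEIGHTS = {
    "mitre_alignment": 0.05,
    "performance_impact": 0.20,
    "capabilities": 0.30,
    "cost": 0.25,
    "integrations": 0.20,
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine per-criterion scores (1 = poor, 5 = excellent) into one number."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights must sum to 1")
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


# Hypothetical vendors: A tops the MITRE results, B is stronger everywhere else.
vendor_a = {"mitre_alignment": 5, "performance_impact": 2, "capabilities": 3,
            "cost": 2, "integrations": 3}
vendor_b = {"mitre_alignment": 3, "performance_impact": 4, "capabilities": 4,
            "cost": 4, "integrations": 4}

print(f"Vendor A: {weighted_score(vendor_a):.2f}")
print(f"Vendor B: {weighted_score(vendor_b):.2f}")
```

With these placeholder numbers, the vendor with the best MITRE alignment still ends up with the lower overall score, which illustrates why the evaluation is only one input.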

It’s also critical to think of an Endpoint Security solution in the broader scope of your security strategy. Endpoint Security represents just one layer of security. Highly effective security programs include several layers, spanning prevention, detection, and response. Choosing the appropriate layers for your organization depends on your goals, budget, and unique needs.

Todyl + Elastic Security + MITRE 2022

Todyl, via our partnership with Elastic, leverages Elastic’s Endpoint Security as a platform. Elastic performed well in the MITRE evaluation, putting emphasis on fidelity. One very important thing to note: our Detection Engineering team builds on top of and customizes Elastic’s base platform with hundreds of rules, machine learning jobs, and integrated threat intelligence that are not represented in the test results. These are tailored to our partners, their customers, and the additional capabilities of the Todyl Security Platform.

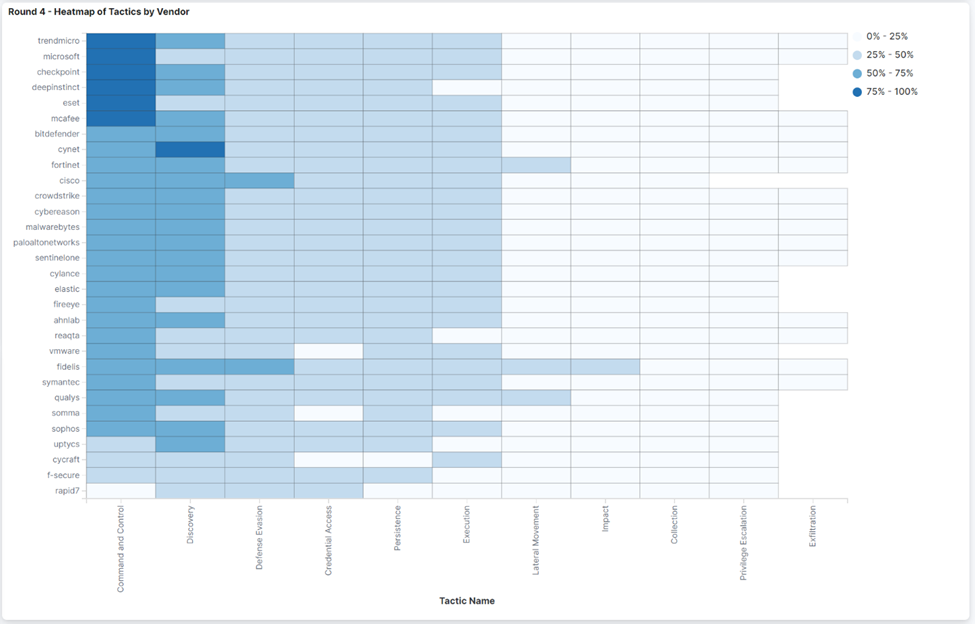

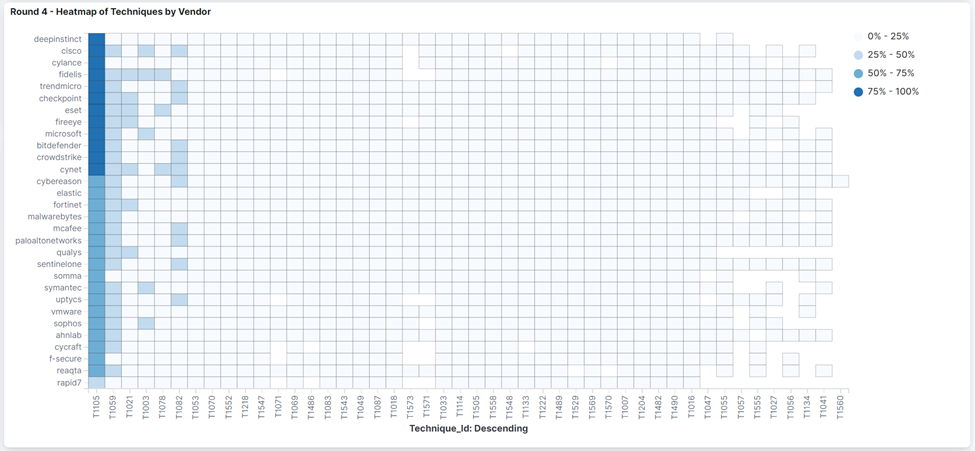

For those interested in a comparison, the charts below compare how vendors performed at detecting the different tactics and techniques in the Wizard Spider and Sandworm test:

General detections refer to cases where the product detected that suspicious activity occurred (e.g., Excel spawning PowerShell), but the detection rule was not mapped to the MITRE Framework. Elastic had the most general detections of any vendor, highlighting the capability of their platform to detect and prevent behavioral anomalies even when they don't align to the MITRE Framework.

If you’d like to discuss this further, or where the Todyl Security Platform fits into your overall security strategy, contact us today.
